Is it possible to download a complete webpage (like with “Save As” in the browser) without opening it in the browser? That is, if I have a link to a webpage, can I download it (with pictures and CSS files) without opening it? Thank you!

I don’t have much shell script experience, so I can’t say this with 100% certainty, but I think it should be possible to accomplish this with curl. Try

curl [URL] > ~/Downloads/test.html

either in Terminal or in an “Execute a Shell Script” action (I recommend ignoring the results in the latter) and see if that doesn’t do the trick.

The suggested method will work with simple websites: no paywalls, no login, etc. It saves only the HTML, so it will not grab images or most styling (e.g., external CSS files). curl's syntax is very complex, and almost any grab is possible with effort (sometimes lots of effort).
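For reference, here is a slightly more defensive version of the curl call suggested above. It is only a sketch: `fetch_html` is a made-up helper name and the default output path is an assumption. It still saves just the HTML, with no images or external CSS.

```shell
#!/bin/sh
# fetch_html is a hypothetical helper name, not part of curl.
# Usage: fetch_html URL [OUTPUT_FILE]
fetch_html() {
  url=$1
  # Assumed default location; pass a second argument to override it.
  out=${2:-"$HOME/Downloads/test.html"}
  # --location    follow HTTP redirects
  # --fail        exit non-zero on HTTP errors such as 404
  # --silent      hide the progress meter
  # --show-error  still print an error message if the transfer fails
  # --output      write the response body to the given file
  curl --location --fail --silent --show-error --output "$out" "$url"
}

# Example call (placeholder URL):
# fetch_html "https://example.com/" "$HOME/Downloads/test.html"
```

Quoting the URL matters in the shell, since characters like `?` and `&` in a link would otherwise be interpreted before curl ever sees them.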

With the limitations @korm noted in mind, the best option is likely the application SiteSucker.

http://ricks-apps.com/osx/sitesucker

It’s also worth pointing out that in Safari, users can Option-click a link to download the linked page. I don’t know if other Mac browsers offer similar functionality.

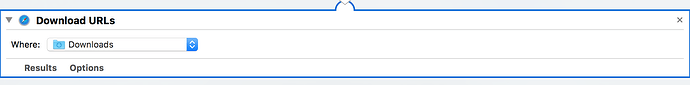

A post was split to a new topic: Create Web Archives (Download Web Page) from a List of URLs

Hey ccstone,

Great job. Thank you very much!

That is a very sophisticated macro/script. Well done, sir!

I'm going to move it to the "Macro Library" and add it to the "Best Macros List", so it will be easier to find.

I have moved Chris' (@ccstone) macro to the "Macro Library":

Hey Mykola,

This won't create a webarchive file, although on first inspection it looks like it can be done with Automator.

Unfortunately I'm not finding a decent example anywhere on the net. If you find one please post it here.

-Chris

The ‘wget’ Unix command can download HTML and images, and I believe it can also follow links. I used it a long time ago, and if I recall correctly you can find and install it using MacPorts (or was it the other Unix command installer for Mac… I am blanking on the name).

Hey Folks,

Wget is indeed a great utility. It can download a website (or a partial one) and can even relink the HTML to operate properly locally.

As Vince noted, it’s not available by default on macOS. You have to install it via MacPorts or Homebrew, or build it yourself from source.
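Once Wget is installed, a single-page "save as" could be sketched roughly like this. `save_page` is a hypothetical helper name and the default folder is an assumption; the flags themselves are standard Wget options.

```shell
#!/bin/sh
# save_page is a hypothetical helper name, not part of Wget.
# Usage: save_page URL [DEST_DIR]
save_page() {
  url=$1
  # Assumed default folder; pass a second argument to override it.
  dest=${2:-"$HOME/Downloads/saved-page"}
  # --page-requisites   also fetch the images, CSS and scripts the page needs
  # --convert-links     rewrite links in the saved HTML so they work locally
  # --adjust-extension  add .html/.css suffixes where the server omits them
  # --span-hosts        allow requisites hosted on other domains (e.g. a CDN)
  # --directory-prefix  put everything under the destination folder
  wget \
    --page-requisites \
    --convert-links \
    --adjust-extension \
    --span-hosts \
    --directory-prefix="$dest" \
    "$url"
}

# Example call (placeholder URL):
# save_page "https://example.com/article.html"
```

This grabs one page and what it needs to render. Downloading a whole site would need Wget's recursive options on top of this.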

Wget will NOT create a webarchive file though. For that you need something more specific.

In addition to the other methods described above, there’s a Unix executable called webarchiver.

It’s several years old, but I just tested it successfully on macOS 10.12.4.

It’s also available through MacPorts.

Usage is very simple:

webarchiver -url <URL> -output <POSIX Path to new file name>

-Chris
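Going by the usage line above, archiving several pages in one go could be sketched like this. `archive_pages` and the `page-N.webarchive` naming scheme are made up for illustration; only the `-url` and `-output` options come from the post above.

```shell
#!/bin/sh
# archive_pages is a hypothetical helper name.
# Usage: archive_pages DEST_DIR URL [URL...]
archive_pages() {
  dest=$1
  shift
  n=0
  for url in "$@"; do
    n=$((n + 1))
    # Assumed naming scheme: page-1.webarchive, page-2.webarchive, ...
    webarchiver -url "$url" -output "$dest/page-$n.webarchive"
  done
}

# Example call (placeholder URLs):
# archive_pages "$HOME/Downloads" "https://example.com/" "https://example.org/"
```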