Hey gang, new user to KB, but really seeing the power of it so far.

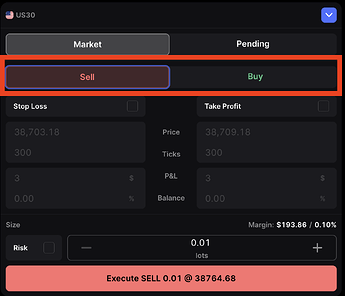

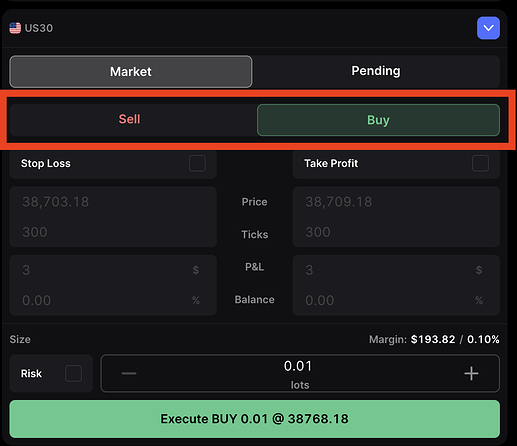

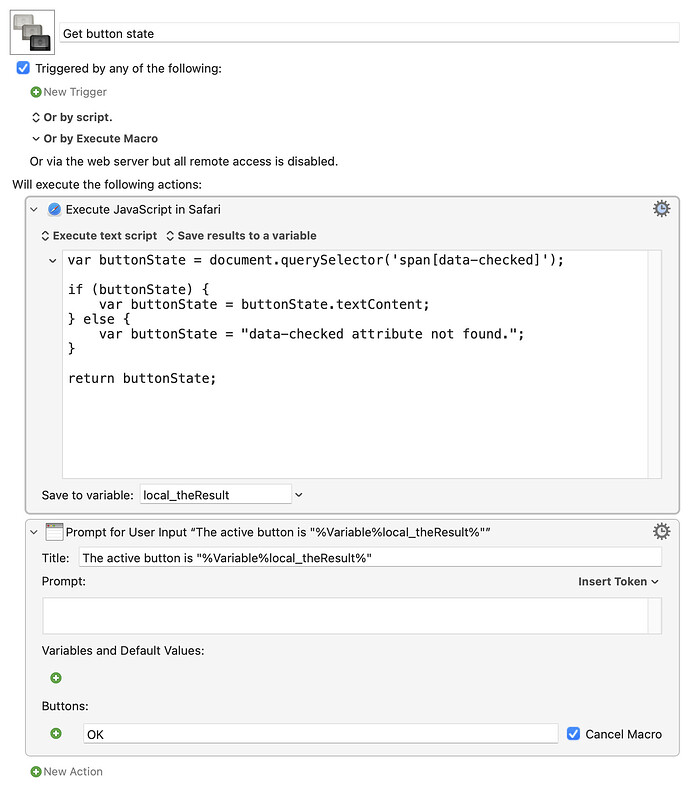

I'm working on some automation, and I want to get the toggle state of a radio button pair on a site (not my own, and it's behind a password), so that I can then perform some other actions elsewhere on my desktop.

Here is the HTML for the "Sell" state. The `data-checked` attribute is set on the label, div, and span elements of the selected option, and that's what tells me which button is active or inactive.

How can I extract this data from the HTML?

<div class="chakra-text css-4zelcx" role="radiogroup">

<label class="css-0" data-checked>

<input id="radio-:rdf:" type="radio" value="sell" name="side" style="border: 0px; clip: rect(0px, 0px, 0px, 0px); height: 1px; width: 1px; margin: -1px; padding: 0px; overflow: hidden; white-space: nowrap; position: absolute;">

<div aria-hidden="true" class="css-u5dp7n" data-checked>

<span class="css-0" data-checked>Sell</span>

</div>

</label>

<label class="css-0">

<input id="radio-:rdg:" type="radio" value="buy" checked name="side" style="border: 0px; clip: rect(0px, 0px, 0px, 0px); height: 1px; width: 1px; margin: -1px; padding: 0px; overflow: hidden; white-space: nowrap; position: absolute;">

<div aria-hidden="true" class="css-14g0qmu">

<span class="css-0">Buy</span>

</div>

</label>

</div>
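
To make the goal concrete, here is a rough Python sketch of the kind of extraction I'm after. It assumes the HTML has already been captured as a string (for example, copied from the browser's inspector), and it relies only on what the markup above shows: the `<label>` of the active option carries `data-checked`. The names `CheckedRadioFinder` and `selected_side` are made up for illustration, and the sample markup is a trimmed-down version of the snippet above.

```python
from html.parser import HTMLParser


class CheckedRadioFinder(HTMLParser):
    """Record the value of the radio input inside the <label> that has data-checked."""

    def __init__(self):
        super().__init__()
        self.in_checked_label = False  # True while inside <label data-checked>
        self.selected = None           # value of the active radio, e.g. "sell"

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)  # valueless attributes map to None
        if tag == "label":
            self.in_checked_label = "data-checked" in attr_map
        elif (
            tag == "input"
            and attr_map.get("type") == "radio"
            and self.in_checked_label
            and self.selected is None
        ):
            self.selected = attr_map.get("value")

    def handle_endtag(self, tag):
        if tag == "label":
            self.in_checked_label = False


def selected_side(html):
    """Return the value of the active radio button, or None if none is marked."""
    finder = CheckedRadioFinder()
    finder.feed(html)
    return finder.selected


# Trimmed-down version of the markup above (assumption: same structure on the live page).
sample = """
<div role="radiogroup">
  <label class="css-0" data-checked>
    <input type="radio" value="sell" name="side">
    <span data-checked>Sell</span>
  </label>
  <label class="css-0">
    <input type="radio" value="buy" name="side">
    <span>Buy</span>
  </label>
</div>
"""

print(selected_side(sample))  # sell
```

The sketch keys off `data-checked` on the label rather than the `checked` attribute on the input, since in the snippet above `checked` appears on the "buy" input even though "Sell" is the active option.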