I'd tackle this problem differently, using one of two approaches.

Approach one is to use a backup app to handle your backups. That's what they're for: they're incredibly good at it and require almost no effort on your part to use well. Personally, I use Carbon Copy Cloner.

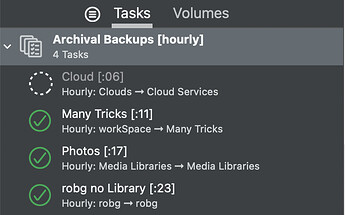

I created a task group that contains all of my "critical" backups. That task group runs each task in the group once per hour, at the number of minutes past the hour shown in the task's name:

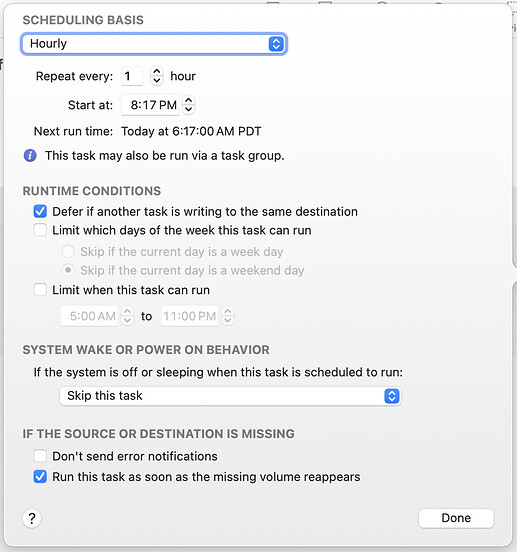

The cloud backup was running when I took that screenshot. Normally CCC isn't onscreen like this; it lives in the menu bar, and I have it show a small progress window in the corner while a backup is running. There's great control over the scheduling, too.

I could write a Keyboard Maestro macro that emulates much of this behavior, but it would be a huge task, and I'd spend more time troubleshooting it than it's worth. It would also still fail under conditions that a backup app handles.

If you don't want to invest in more software, though, I'd recommend replacing 99% of your macro with a single shell command: `rsync`. Here's the top of its man page:

> rsync is a fast and extraordinarily versatile file copying tool. It can copy locally, to/from another host over any remote shell, or to/from a remote rsync daemon. It offers a large number of options that control every aspect of its behavior and permit very flexible specification of the set of files to be copied. It is famous for its delta-transfer algorithm, which reduces the amount of data sent over the network by sending only the differences between the source files and the existing files in the destination. Rsync is widely used for backups and mirroring and as an improved copy command for everyday use.
>
> rsync finds files that need to be transferred using a "quick check" algorithm (by default) that looks for files that have changed in size or in last-modified time. Any changes in the other preserved attributes (as requested by options) are made on the destination file directly when the quick check indicates that the file's data does not need to be updated.

It's a very powerful program, and the man page includes extensive examples, including this one:

```
rsync -avz foo:src/bar /data/tmp
```

> This would recursively transfer all files from the directory src/bar on the machine foo into the /data/tmp/bar directory on the local machine. The files are transferred in archive mode, which ensures that symbolic links, devices, attributes, permissions, ownerships, etc. are preserved in the transfer. Additionally, compression will be used to reduce the size of data portions of the transfer.

(The `-a` flag is the important one there: it sets "archive mode," which preserves links, permissions, timestamps, and other attributes as it copies.)

But you don't have to transfer from another machine; leave out the `foo:` host prefix and use local paths:

```
rsync -avz /path/to/folder/to/back/up /path/to/backup/folder
```

And that's your entire macro. Put it in an Execute Shell Script action on a periodic trigger, and each run will copy only the files that are new or have changed since the last time rsync ran. I still use rsync as a backup tool myself, to copy files from our web server to local backups.

One very important note: The version of rsync bundled with macOS is very old:

```
$ /usr/bin/rsync --version
rsync version 2.6.9 protocol version 29
Copyright (C) 1996-2006 by Andrew Tridgell, Wayne Davison, and others.
```

If you're going to take this approach, use Homebrew or MacPorts to install a much newer and better version:

```
$ rsync --version
rsync version 3.3.0 protocol version 31
Copyright (C) 1996-2024 by Andrew Tridgell, Wayne Davison, and others.
```
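Installing a newer rsync isn't quite enough on its own. As I understand it, Keyboard Maestro's Execute Shell Script action runs with a minimal default PATH that doesn't include the Homebrew or MacPorts directories (unless you set the `ENV_PATH` variable), so a bare `rsync` there would likely still run the old /usr/bin copy. A minimal sketch of one workaround: check the usual install locations and use the first rsync that reports version 3 or later. The candidate paths and the helper names `rsync_major` and `pick_rsync` are my own assumptions, not anything rsync or Keyboard Maestro provides.

```shell
#!/bin/sh
# Sketch: find an rsync 3.x before running a backup from a shell script action.
# Candidates are the usual Homebrew (Apple Silicon, then Intel) and MacPorts
# locations; adjust them if yours differ. Helper names are hypothetical.

# Print the major version number from `rsync --version` output on stdin.
rsync_major() {
  sed -n 's/.*version *\([0-9][0-9]*\)\..*/\1/p' | head -n 1
}

# Print the path of the first candidate rsync reporting version 3 or later.
pick_rsync() {
  for candidate in /opt/homebrew/bin/rsync /usr/local/bin/rsync /opt/local/bin/rsync; do
    if [ -x "$candidate" ]; then
      major=$("$candidate" --version 2>/dev/null | rsync_major)
      if [ "${major:-0}" -ge 3 ]; then
        printf '%s\n' "$candidate"
        return 0
      fi
    fi
  done
  return 1
}

if RSYNC=$(pick_rsync); then
  echo "Backups will use $RSYNC"
else
  echo "No rsync 3.x found; install one with Homebrew or MacPorts" >&2
fi
```

In the macro, the success branch is where the actual backup line would go, e.g. `"$RSYNC" -a /path/to/folder/to/back/up /path/to/backup/folder`. Hard-coding the one path you know is installed works just as well; the lookup only helps if the macro runs on more than one Mac.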

-rob.