For those of you writing JXA scripts, how are you handling reusable code "libraries"? Especially in code you might share with others.

For example, let's say I have something called "FileUtils", which I reuse in many places. Right now I just copy it into the JXA script I'm working on. It's a crude way of reusing code, but it is what it is.

For me, each set of reusable code is in its own class, generally with static methods. This acts like a namespace. Here's a trimmed-down example:

    class FileUtils {
        static readTextFile(path) {
            // ...
        }

        static writeTextFile(text, path) {
            // ...
        }
    }

    const text = FileUtils.readTextFile("/Path/To/Text/File");

The downside of this is when you have a class with a lot of members - you don't want to copy the entire thing into each JXA script.

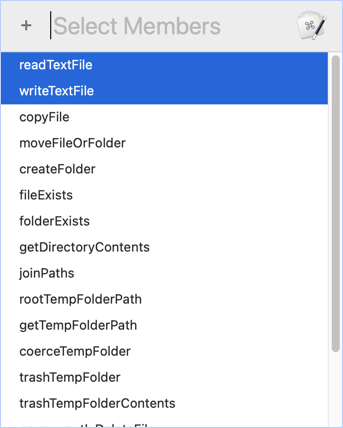

So I came up with a way to let me select which members I want in the class when I paste the code in. It's multi-select, and if you select something that requires one of the other members, they get included automatically:

...which would give me:

    class FileUtils {
        static readTextFile(path) {
            // ...
        }

        static writeTextFile(text, path) {
            // ...
        }

        static fileExists(path) {
            // ...
        }

        static #fileOrFolderExists(path) {
            // ...
        }

        static #getNSErrorMessage(nsError, message) {
            // ...
        }
    }

It's a fairly involved method to implement, but it's really simple to use.
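To give a rough idea of the "included automatically" part, here is a minimal sketch of the dependency resolution on its own. It is not the actual implementation. It assumes a hand-maintained map of which member calls which, and the specific edges in `dependencies` are made up for illustration. `resolveMembers` is a hypothetical name.

```javascript
// Hypothetical dependency map: each member lists the members it calls.
// These edges are assumptions for illustration, not the real FileUtils internals.
const dependencies = {
  readTextFile: ["#getNSErrorMessage"],
  writeTextFile: ["#getNSErrorMessage"],
  fileExists: ["#fileOrFolderExists"],
  "#fileOrFolderExists": [],
  "#getNSErrorMessage": [],
};

// Return the selected members plus everything they need, directly or indirectly.
function resolveMembers(selected, deps) {
  const included = new Set();
  const pending = [...selected];

  while (pending.length > 0) {
    const name = pending.pop();
    if (included.has(name)) continue; // already handled, also guards against cycles
    if (!Object.prototype.hasOwnProperty.call(deps, name)) {
      throw new Error(`Unknown member: ${name}`);
    }
    included.add(name);
    pending.push(...deps[name]);
  }

  // Emit members in the order they are declared in the map.
  return Object.keys(deps).filter((name) => included.has(name));
}

const members = resolveMembers(["writeTextFile", "fileExists"], dependencies);
console.log(members.join(", "));
```

With that map, selecting `writeTextFile` and `fileExists` pulls in the two private helpers and leaves `readTextFile` out. The worklist plus a `Set` keeps it from looping forever if two members ever depend on each other.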

So how do you guys handle this? I'm hoping someone will come along and show me a much better way.