Try this at the start of your macro:

Thanks, but then I don't get the Return keystroke if it's not a GPT window or tab, as I now see DanThomas has noted.

You should put the macro in its own group, then set the group like this:

I never noticed these Available in all windows / Enabled when a focused window [...] options, and I wonder how far back in the KM history they go. If all the way, I'm the more embarrassed.

That said, my KM Groups panel is as crowded as my (undesiredly notched, grr-r) menu bar, so I'll put this condition in the macro itself if I can. I assume that's possible, even though I think GPT and I tried this and somehow it wasn't working. (This has been, for a while now, one of those cases where much more time and trouble goes into building a working macro than is saved by using it, but I'm sure everyone here knows the pleasure of finally getting it going, whatever it takes. I'm hoping to visit Peter Lewis in heaven, by the way, as I'm sure he's going to have an absolutely fabulous pad there.) [...] But no, the AI chat doesn't include anything about the KM FrontBrowserURL token, so I think that's my salvation.

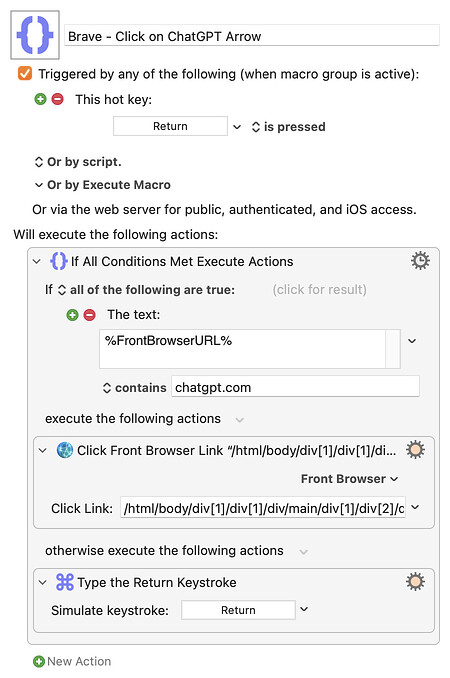

And it is. Here's my macro. Thanks, everybody!

Brave - Click on ChatGPT Arrow.kmmacros (3.4 KB)

I now see DanThomas has apparently disapproved of this, saying you can't guarantee the simulated keystroke will work, but it's working for me here, so I'm not going to worry about it unless at some point it doesn't.

Just one question: GPT affably asked me to send it the macro that worked, and I had once again forgotten how to export a macro in text-only format. So I typed it out manually, thus:

If all of the following are true:

The text %FrontBrowserURL% contains chatgpt.com

execute the following actions

Click Link: /html/body/div[1]/div[1]/div/main/div[1]/div[2]/div[1]/div/form/div/div[2]/div/div/button

otherwise execute the following actions

Simulate keystroke: Return

I know I used to be able to do this, but I can't remember how, and it doesn't appear to be provided for in the KM File > Export options. It may be that in those earlier cases I just copied the actions in the macro, which worked because they were all simple one-step actions, and that it didn't work now because the If Then Else action is more complex. But if there's some way to get the entire text of such an action or macro, I'd like to know what it is. Thanks again.
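
In the meantime, for anyone who would rather read the branching as code, here is a rough Python sketch of the decision the macro above makes. It is only an illustration of the If Then Else logic, not something Keyboard Maestro produces or runs: the function name `choose_action` and the action labels `click_link` and `simulate_keystroke` are made up for this example, and the check is a plain substring test that doesn't try to mirror how KM itself handles case.

```python
# Illustrative sketch only: models the macro's If Then Else decision.
# choose_action and the action labels are hypothetical names, not KM APIs.

CHATGPT_HOST = "chatgpt.com"

# XPath copied from the Click Link action in the macro above.
SEND_BUTTON_XPATH = (
    "/html/body/div[1]/div[1]/div/main/div[1]/div[2]/div[1]"
    "/div/form/div/div[2]/div/div/button"
)


def choose_action(front_browser_url: str) -> tuple[str, str]:
    """Return (action, argument) for the given front browser URL.

    front_browser_url stands in for the expanded %FrontBrowserURL% token.
    """
    if CHATGPT_HOST in front_browser_url:
        # ChatGPT tab: click the send arrow via its XPath.
        return ("click_link", SEND_BUTTON_XPATH)
    # Any other page: fall back to a plain Return keystroke.
    return ("simulate_keystroke", "Return")


if __name__ == "__main__":
    print(choose_action("https://chatgpt.com/"))
    print(choose_action("https://example.com/"))
```

The point of the sketch is just that the URL test lives inside the macro, so the Return fallback still fires on every non-ChatGPT page, which is what putting the condition on the group would have prevented.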