I have used Keyboard Maestro to create a faux operating system that runs on my mum's TV. Using a Stream Deck, she can now watch films, play music, and make phone calls by pressing one of the 10 buttons on the SD. I've got it working pretty well, but there's a lag of almost 2 seconds on all the actions and I want to figure out how to make the code more efficient. I'm on an M1 with 32GB of RAM.

I've looked in the forum and am finding mixed results that I'm not sure apply to my case. I'm wondering if you guys could help with some consolidated tips on how to optimize my workflow?

Number of Macros

- There are around 10 "top level" macros that get called by the Stream Deck.

- Under the hood there are an additional 50 macros that get called in the background.

- I can't say for sure but I think the nesting of macros calling other macros might go 5-7 levels deep at times.

- Despite this, my Keyboard Maestro Macros.plist is only 300 KB.

BACKGROUND

The reason for all this over-complexity is to make the front-end feel as much like a TV experience as possible, meaning everything happens fullscreen, the OS interface is never shown, and the system jumps between opening videos in VLC, music in Spotify, FaceTime calls, and webpages in Chrome.

Bear in mind this is for someone with dementia, so in between each "scene change" I have assistive full-screen messaging and voice feedback.

Lastly, it's entirely controllable with the Stream Deck, no mouse or keyboard needed.

That goes some way to explaining why I've ended up with a total of 62 macros instead of simply 10.

Some optimising I've done already:

For example, I have around 5 text files being written/read by Bash scripts and AppleScripts (things like generating a list of all files in the Films folder). In the early days I read and wrote these text files each time a macro was called, but I have since optimised this by moving most of that functionality into scheduled macros that run once a day, leaving a leaner portion of the code to execute each time "Play a random Film" gets called. This improved execution time. Now I'm wondering what else I could look into.
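To make that split concrete, here is a rough sketch of the idea rather than my actual macros. The paths, file extensions, and the function names `build_film_index` and `pick_random_film` are all made up for illustration:

```shell
#!/bin/bash
# Hypothetical paths, for illustration only.
FILMS_DIR="${FILMS_DIR:-$HOME/Films}"
INDEX_FILE="${INDEX_FILE:-$HOME/Engine/films.txt}"

# Scheduled, once a day: rebuild the cached list of film files.
build_film_index() {
    mkdir -p "$(dirname "$INDEX_FILE")"
    # Write to a temp file first so a button press never sees a half-written list.
    find "$FILMS_DIR" -type f \( -name '*.mp4' -o -name '*.mkv' \) > "$INDEX_FILE.tmp" &&
        mv "$INDEX_FILE.tmp" "$INDEX_FILE"
}

# On each button press: print one random line from the cached list.
pick_random_film() {
    local count
    count=$(( $(wc -l < "$INDEX_FILE") ))
    [ "$count" -gt 0 ] || return 1
    sed -n "$(( RANDOM % count + 1 ))p" "$INDEX_FILE"
}
```

The expensive folder scan only happens in `build_film_index`; the per-press work in `pick_random_film` is a line count and a single `sed` call.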

Bash + Applescripts

I have several Bash scripts and AppleScripts.

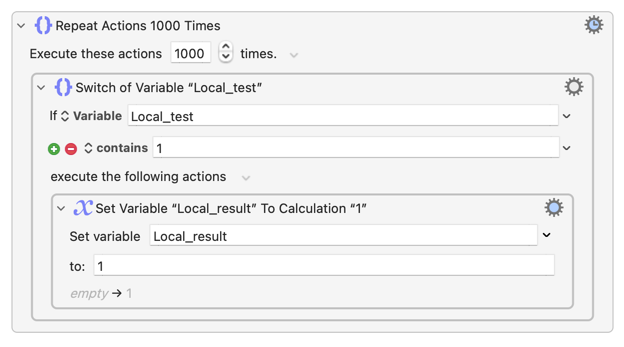

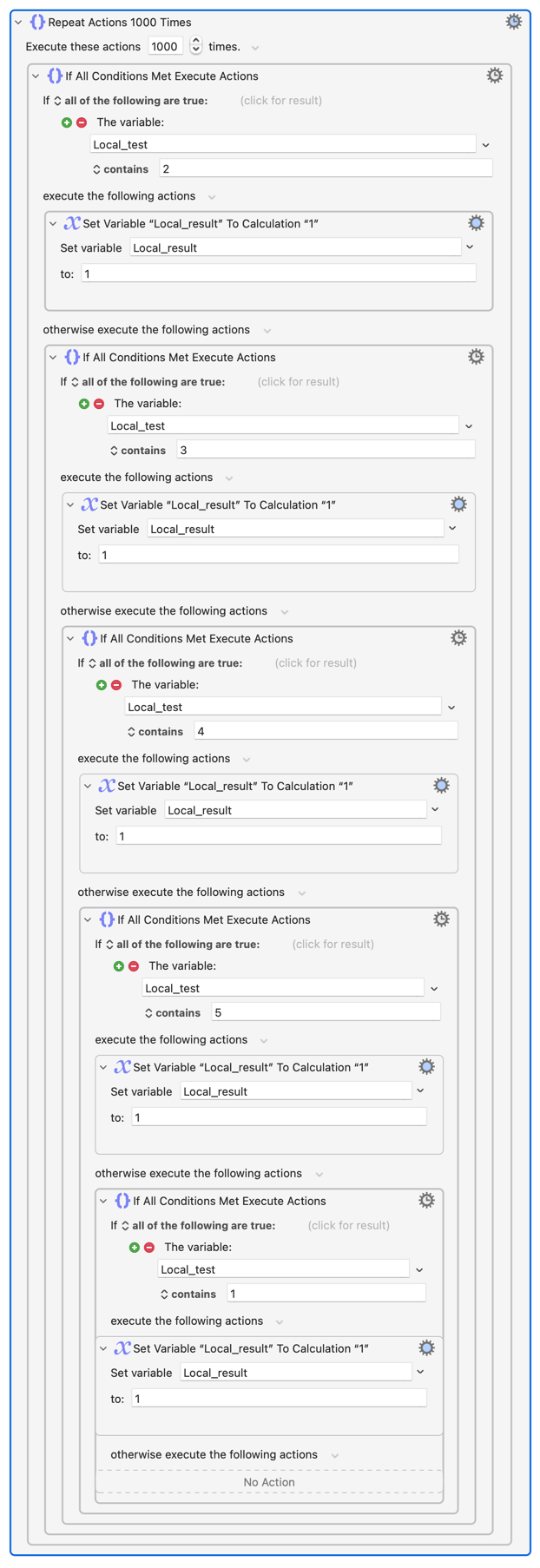

Some of them check app statuses, like `tell application "Spotify" if player state is playing then return "playing" else return "not playing" end if end tell`, which I believe adds time. I've disabled some of these and replaced them with an annoyingly complex system of manually created variables that the system manages itself. However, this has increased the number of IF statements and calculations needed.

- How do KM's native IF statements compare with checking app statuses via AppleScript?

DEBUG SUBROUTINE

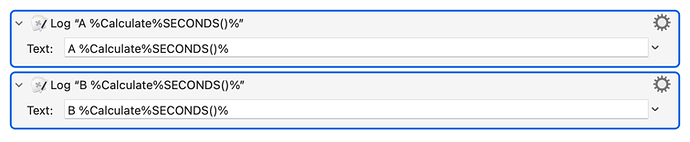

I also have a Debug subroutine that gets called from pretty much every single macro, multiple times, appending new lines to a log file. Inside it is this bash script:

Debug BASH

#!/bin/bash
# Config
LOG_DIR="$KMVAR_Config_HomePath/Engine/KM"
LOG_FILE="$LOG_DIR/debug.log"
MAX_SIZE=1048576 # 1MB in bytes
BACKUP_COUNT=50 # Number of old logs to keep
short_user="${KMVAR_Config_User:0:1}"
Debug_ExecutingMacro=$KMVAR_Debug_ExecutingMacro

# Function to rotate logs
rotate_log() {
    # Shift old logs
    for i in $(seq $((BACKUP_COUNT-1)) -1 0); do
        if [ -f "${LOG_FILE}.$i" ]; then
            mv "${LOG_FILE}.$i" "${LOG_FILE}.$((i+1))"
        fi
    done
    # Move current log to .0
    mv "$LOG_FILE" "${LOG_FILE}.0"
    touch "$LOG_FILE"
}

# Check file size and rotate if needed
if [ -f "$LOG_FILE" ]; then
    size=$(stat -f%z "$LOG_FILE")
    if [ "$size" -gt "$MAX_SIZE" ]; then
        rotate_log
    fi
fi

# previous time (empty on first run, so the check below can detect it):
debug_timestampLast="${KMVAR_debug_timestampLast:-}"
# current time:
debug_timestampString="$(/usr/local/opt/coreutils/libexec/gnubin/date '+%y/%m/%d %H:%M:%S.%3N')"
debug_timestamp="$(/usr/local/opt/coreutils/libexec/gnubin/date '+%s.%3N')"

# calc time passed (with check for first run)
if [ -z "$debug_timestampLast" ]; then
    time_diff="0.000"
else
    time_diff=$(echo "$debug_timestamp - $debug_timestampLast" | bc)
fi

# Update the debug_timestampLast variable
osascript <<EOF
tell application "Keyboard Maestro Engine"
setvariable "debug_timestampLast" to "$debug_timestamp"
end tell
EOF

# Append new log entry (using standard ASCII characters)
echo "__________
[$short_user], $debug_timestampString, delay: $time_diff, [$Debug_ExecutingMacro],
$KMVAR_mylocal_txt
" >> "$LOG_FILE"

I tried disabling this macro (by adding a Return action at the top, because actually disabling it didn't prevent it from being executed) and it sped up performance by quite a bit. However I'd like to keep it for debugging purposes. Is there anything in there that is particularly memory-intensive? Timestamps? Formatting?

Any tips on how to store logs for debugging purposes without cramming the memory? I find them super hard to read, hence the additional formatting and variables.

(To be honest, in writing this part of the post, I just figured out that this Debugger is indeed the biggest culprit in my workflow. I'll keep the rest of the post as is, just because I'd still like to understand.)
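Looking at the script again, every call starts several extra processes: `stat`, `date` twice, `bc`, and `osascript`. One direction I could try is a leaner logger along these lines. This is an unmeasured sketch, not something I've tested in my setup. It assumes `DATE_BIN` points at GNU `date`, and `STATE_FILE` is a hypothetical file standing in for the `debug_timestampLast` Keyboard Maestro variable so that `osascript` isn't needed. Log rotation is left out on the assumption that it could move to a scheduled macro.

```shell
#!/bin/bash
# Assumptions: DATE_BIN is GNU date (supports %N). LOG_FILE and STATE_FILE
# are placeholder paths; STATE_FILE replaces the debug_timestampLast variable.
DATE_BIN="${DATE_BIN:-date}"
LOG_FILE="${LOG_FILE:-/tmp/debug.log}"
STATE_FILE="${STATE_FILE:-/tmp/debug.last}"

# log_debug MACRO_NAME TEXT: append one entry with the delay since the last call.
log_debug() {
    local macro="$1" text="$2" stamp now_ms last_ms diff_ms
    # One date call yields both the readable stamp and epoch milliseconds.
    stamp=$("$DATE_BIN" '+%y/%m/%d %H:%M:%S.%3N|%s%3N')
    now_ms="${stamp#*|}"
    stamp="${stamp%|*}"
    # Read the previous timestamp from a file (first run: delay is zero).
    last_ms=""
    [ -f "$STATE_FILE" ] && read -r last_ms < "$STATE_FILE"
    last_ms="${last_ms:-$now_ms}"
    printf '%s\n' "$now_ms" > "$STATE_FILE"
    # Integer milliseconds, formatted as seconds.mmm without calling bc.
    diff_ms=$(( now_ms - last_ms ))
    printf '__________\n%s, delay: %d.%03d, [%s],\n%s\n\n' \
        "$stamp" $(( diff_ms / 1000 )) $(( diff_ms % 1000 )) "$macro" "$text" >> "$LOG_FILE"
}
```

In the subroutine this would be called as something like `log_debug "$KMVAR_Debug_ExecutingMacro" "$KMVAR_mylocal_txt"`, leaving one `date` process per log entry instead of five processes.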

Some other questions that come to mind

- Is reading/writing to internal variables more efficient than text files?

- I have an additional 15 text and log files being written (no reading) for logging purposes by various bash/applescripts. Is appending single lines to a text file a memory-chugger or is it minimal?

- Disabled actions inside an active Macro: do they add to memory?

- Does having [2 Macros calling 1 Subroutine with a bash script] weigh more than [2 Macros each with its own copy of the same identical bash script]?
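To compare some of these costs myself, I could time each piece in isolation with a small helper like the one below. It is only a sketch: `time_runs` is a made-up name, and it assumes `DATE_BIN` points at GNU `date` for millisecond timestamps.

```shell
#!/bin/bash
# time_runs N CMD [ARGS...]: run CMD N times, print the average duration in ms.
# Assumes GNU date (for %N); DATE_BIN is a hypothetical override for its path.
DATE_BIN="${DATE_BIN:-date}"

time_runs() {
    local n="$1" start end i
    shift
    start=$("$DATE_BIN" '+%s%3N')
    for (( i = 0; i < n; i++ )); do
        "$@" > /dev/null 2>&1
    done
    end=$("$DATE_BIN" '+%s%3N')
    echo $(( (end - start) / n ))
}

# Example comparisons (commented out so nothing runs on load):
# time_runs 20 sh -c 'echo line >> /tmp/append-test.log'
# time_runs 20 osascript -e 'tell application "Spotify" to player state'
```

The averages include the cost of starting each process, which is the part I suspect matters most here.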

Any other tips, or links that I should read, feel free!

Many thanks