Is there a more efficient way to simulate function pointers (i.e. calling a Macro by name stored in a variable) in KM scripting than to use AppleScript? I'm guessing that switching to AS would slow things down a little. Or…would it?

If you would like to measure efficiency, then why not take a measurement by using the MS function before and after you try doing this function via AppleScript? If it's fast enough, the question becomes moot. How fast is fast enough for you?

It's not efficiency that makes me want the same thing that you do - it's simplicity and readability. But I get the impression that not enough people have called for this feature yet. I also want this feature for variable names.

Hi @Sleepy,

Thank you for your reply. I believe it would take too much time for me to evaluate efficiency through trial and error. Peter has so spectacularly enhanced and built out KM that it is now a full-fledged development environment…quite a peculiarly wonderful one. While I have some training and experience in such things, I'm at a loss as to how to code efficiently in…I suppose, let's call it Keyboard Maestro. At least it's not a pun, like C++. Or a bad one, like C#.

Is there already an existent Best Practices…Dennis Ritchie, what are you up to, these days?

I can only guess (but I hope I'm correct) that each KM action (and KM itself) has been implemented as efficiently as possible. In my use of KM the only "bottleneck" if you can call it that is the interaction with the other apps that I want KM to automate for me. So, for example, the speed at which KM can issue the command to Safari to open a URL is irrelevant because it may take Safari much time to load that URL - so much time in fact that I have to program in pauses into my macro to wait for Safari to complete.

To address your original question, I've been executing macros whose names are stored in KM variables for literally years using a simple AppleScript encapsulated into a KM Third Party Plug In Action.

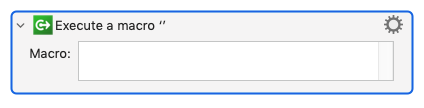

When you add this action to your macro it looks like this:

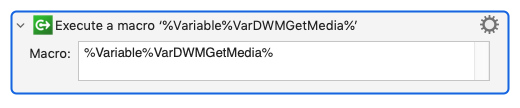

Here's an example of it in use in one of my macros:

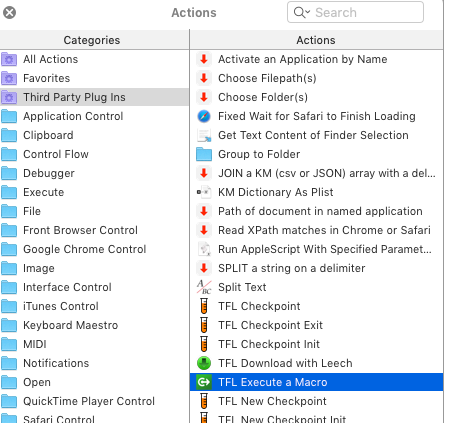

And this is the Plug In as it appears in the KM Action List:

If you're interested, here's the plug in action itself, ready to be installed in KM:

TFL Execute a Macro.zip (443.7 KB)

If you don't know how to install a Third Party Plug In Action, just download the zip file and then drag it from Finder onto the KM icon in the dock - or just read about it here: manual:Plug In Actions [Keyboard Maestro Wiki]

As with any software, YMMV and I bear no responsibility for its use or misuse!
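For anyone who wants to see the general idea of "calling something by a name stored in a variable" outside of KM, here is a minimal Python sketch. It is only an analogy, not the plug-in's actual script: the macro names, the `MACROS` registry, and `execute_macro_by_name` are all hypothetical and exist purely for illustration.

```python
# Hypothetical stand-ins for macros; the names are illustrative only.
def open_inbox():
    return "inbox opened"


def archive_mail():
    return "mail archived"


# Registry mapping a macro's name (a string) to the callable that runs it.
MACROS = {
    "Open Inbox": open_inbox,
    "Archive Mail": archive_mail,
}


def execute_macro_by_name(name):
    """Look up a macro by the name held in a variable and run it."""
    try:
        macro = MACROS[name]
    except KeyError:
        raise ValueError(f"no macro named {name!r}") from None
    return macro()


macro_name = "Archive Mail"  # plays the role of a KM variable
result = execute_macro_by_name(macro_name)
print(result)
```

The lookup by string is what stands in for a function pointer here; an unknown name fails loudly instead of silently doing nothing.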

Hey Bill - if you look around the forum there are a few regular and frequent contributors who between them could write the best practices book on KM. I’ve been learning from them for a long time!

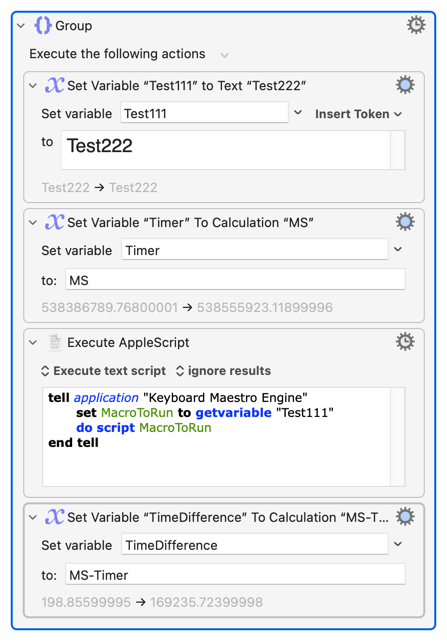

You are welcome. I see you have no idea how to evaluate the time an action takes, so I'll include a screenshot below to show you how I did it (i.e., how I tested your question) in a couple of minutes. Here it is. If you look at the bottom of the last action, you can see the time difference was 198 milliseconds. In other words, calling an AppleScript action which did exactly what you wanted took 0.2 seconds. Is that not efficient enough for you? I admit, it's kind of slow.
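The same before-and-after measurement can be sketched in Python. This is not the KM macro from the screenshot, just the pattern of reading a clock before and after a call; `time_call_ms` and `sample_action` are hypothetical names, and the 10 ms sleep is a placeholder for whatever is being timed.

```python
import time


def time_call_ms(func, *args, repeats=5):
    """Return the fastest wall-clock time of func(*args) in milliseconds."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        elapsed_ms = (time.perf_counter() - start) * 1000.0
        best = min(best, elapsed_ms)
    return best


def sample_action():
    # Hypothetical stand-in for the action being measured.
    time.sleep(0.01)


elapsed = time_call_ms(sample_action)
print(f"fastest run: {elapsed:.1f} ms")
```

Taking the fastest of several runs is one common way to reduce noise from whatever else the machine is doing at the time.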

Thank you thank you thank you @tiffle and @Sleepy – the best part of KM isn't even the macros, it's the people you meet along the way.

Thank you for running that, @Sleepy (and @tiffle). It's usually the algorithm that determines the run time—how many operations are required in relation to the amount of data. For example, a "set variable" action always takes the same amount of time, regardless of the value in the variable. So that's "Constant" time.

But if you have a loop, then there must be an "if-then" check for each iteration, so your code runs (at most) as many times as the loop iterates. That's "Linear" time: as the amount of data goes up, the execution time goes up in proportion.
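A small Python sketch of the difference, counting steps rather than measuring time. The functions `set_variable` and `find_first` are hypothetical illustrations, not KM actions.

```python
def set_variable(store, name, value):
    """One assignment regardless of the value: constant work."""
    store[name] = value
    return 1  # number of steps performed


def find_first(items, target):
    """One comparison per item until a match: linear work at worst."""
    steps = 0
    for item in items:
        steps += 1
        if item == target:
            break
    return steps


for n in (10, 100, 1000):
    data = list(range(n))
    # The target -1 is never present, so the loop visits every item.
    print(n, set_variable({}, "x", data), find_first(data, -1))
```

In the worst case shown (target not found), the step count for `find_first` equals the size of the data, while `set_variable` always reports a single step.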

From there, we have polynomial time, NP-complete, exponential time, until we arrive at non-Turing-decidable problems, which can never be computed on any machine that can ever be built in our universe.

But some things are so slow (they take so many operations) that even though a computer could solve them, there wouldn't be enough time in the universe for it to finish, no matter how fast that computer is. An example is chess: predicting every possible move. You can do that if you look up to 12 moves ahead. After that? The universe will end before the programme ends.

This is the mathematics of Complexity Theory.

Complexity Theory applies to KM because it (KM) is equivalent to a Turing machine—the highest level of problem-solving device that can exist in the universe which can be created (by mortals).

The problems solvable by KM and by a computer the size of a planet are exactly the same.

And strange as it may seem, if you built a computer that ran 1,000,000,000,000,000,000,000 times faster than KM…it wouldn't really be any faster. Most algorithms are polynomial or exponential in time complexity. And they require so many operations that, as long as each operation takes some amount of time, there's really no difference in execution time. It's like @tiffle was saying about waiting for inter-application communication.
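To put rough numbers on that, here is a back-of-the-envelope Python sketch. It assumes idealised cost models (exactly 2**n or n**3 steps), which real programs rarely follow so neatly; the function names are hypothetical.

```python
import math

SPEEDUP = 10**21  # the speed-up factor quoted above


def extra_size_exponential(speedup):
    """Extra input size affordable when cost grows as 2**n."""
    return math.log2(speedup)


def size_multiplier_polynomial(speedup, degree):
    """Factor by which input size can grow when cost grows as n**degree."""
    return speedup ** (1.0 / degree)


print(f"2**n work: about {extra_size_exponential(SPEEDUP):.1f} more items")
print(f"n**3 work: about {size_multiplier_polynomial(SPEEDUP, 3):.0f}x more items")
```

Under these assumptions, the exponential case gains only about 70 additional items in the same running time, while the cubic case gains a factor of roughly ten million, so the point applies most strongly to exponential work.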

When writing compilers, you want to target the specific optimisations that a chip has. For example, chips have on-board branch-prediction—it guesses when a loop will break, and it's usually right…this saves a lot of time in programme execution.

When you write your code, in turn, you write for the compiler. There may be an urge to write for the processor, but 99.99999% of the time, the compiler does a better job. (This is the answer to my question, I realised…I'll come back to that if for some reason anyone is still reading this.)

So, if you write in an interpreted language, you write for the interpreter.

And if you write in a meta-interpreted language (like Keyboard Maestro), then you write for the interpreted scripting language (AppleScript), which writes for the procedural language (Objective-C), which writes for the compiler, which writes for the assembler, which writes for the microprocessor.

Because there's such a long journey (and we're skipping micro code, the OS, JIT compilers, etc.), it's challenging in meta-scripting languages to determine best-coding practices, because you need to know how all of those other things work (within a given time-complexity class).

So, if I'm writing in AppleScript, I can make educated guesses about how best to do things, depending on whether it's "compiled" or not…but really, I need to target the interpreter that Apple's running, and I don't know much about it, just how it would need to target the Mach kernel. So I'm trying to pass function pointers in an interpreted automation language.

Peter uses many clever optimisations in KM because he does know that territory. So, to answer my own question, the best coding practices are generally to use the language as it was designed by its creator. And let the language (compiler, interpreter, etc.) just do its thing.

To put it another way, if KM isn't fast enough to do what I want, the problem is that I'm misusing the language—it's not a limitation of the language.

That being said, I would guess that avoiding raw AppleScript is a good idea when writing KM macros—best to let Keyboard Maestro make those translations.

This is a cool little tool - thanks!